What happened in the creative AI world in the first two weeks of March?

Welcome to the Mahazine, a twice-monthly newsletter covering the latest news in the creative AI world: inspiring visuals and experiments, trending AI experiences and marketing campaigns, and AI tools and tutorials.

📣 Big news

👉 Apple bought DarwinAI, an AI startup focused on computer vision and on optimizing deep neural networks. The acquisition could help Apple make its AI models smaller and faster, which may prove useful for bringing generative AI features to iOS 18 and other products later this year, as the company works to catch up with OpenAI, Google and Microsoft in releasing advanced AI capabilities. Several members of DarwinAI's team joined Apple's machine learning teams in January.

👉 Tavus, a generative AI video startup, raises $18M to bring face and voice cloning to any app. Tavus helps companies create digital "replicas" of individuals for automated, personalized video campaigns, and has raised $18 million in Series A funding led by Scale Venture Partners. The company is now opening its platform to third-party developers through APIs, so they can integrate Tavus's voice and face cloning technology into their own applications. This includes a "replica API" that can create photorealistic digital avatars from just 2 minutes of training data, using Tavus's new "Phoenix" model based on neural radiance fields (NeRF). The goal is personalized video content at scale in areas such as sales, marketing and customer onboarding.

👉 Researchers use ASCII Art to Jailbreak Major LLMs. Researchers have developed a technique called ArtPrompt that can jailbreak large language models (LLMs) such as GPT-3.5, GPT-4, Gemini, Claude and Llama 2 by using ASCII art in prompts to bypass the models' guardrails. The method replaces sensitive words such as "bomb" with ASCII art, arrangements of characters that spell the word out visually. LLM safety training focuses on the semantics of natural language, and ArtPrompt exploits the fact that purely text-based models can be confused by visual representations. The researchers published their findings in the hope that developers will patch this vulnerability, since the same technique could be used for malicious jailbreaks, not just academic research.

👉 EU AI Act Approval Met With Praise and Criticism. The European Parliament approved the AI Act, the first-ever regulatory framework governing the use of AI systems in the EU. Supporters argue that the strict rules and potential fines will increase public trust in AI. Critics, however, contend that the law prioritizes industry interests over sufficient human rights protections. The debate shows how hard it is to strike a fair compromise, and companies must now make significant changes to comply with the new rules.

👉 Google DeepMind Trains Cooperative AI Companion for Multi-Game Play. Researchers at Google DeepMind have developed SIMA, an AI agent trained on video data from multiple 3D games to learn actions and objectives the way a human player does. SIMA can follow verbal instructions and play cooperatively alongside humans, rather than simply being coded to win. By exposing it to diverse gameplay, the researchers aim for SIMA to generalize its skills to new games, making it a more natural and adaptable AI gaming companion.

🦄 March explorations

Some recent topics we played with:

📺 Giant screens on city buildings

👴🏼 Chinese grandpas doing Tai Chi

💡 Mycelium fabric lighting design

👀 Creative picks

[Marketing campaign] Washington’s Lottery uses AI for a TV spot and social videos

The campaign was created by WPP agency VML Seattle in partnership with the studio Secret Level. The TV spot and social videos use AI to place the actors playing lottery winners in exotic destinations, doing wild activities. A companion mobile experience at testdriveawin.com extends the campaign to the audience: you upload a photo of yourself, and it generates images of you doing crazy things around the world. (From adage.com)

[Digital Hospitality] Digital human AI cabin crew Sama takes Qatar Airways’ brand experience to the skies

Qatar Airways is rolling out an AI-powered cabin crew member called Sama. This digital human can answer customers' frequently asked questions on topics such as baggage policies, check-in processes, layover tips and more, so customers get the answers they need faster. Sama could also take on further tasks, such as flight recommendations, booking status updates and seat selection assistance, sooner than we think.

You can try the experience with Sama on the Qverse here

[AI Doll] Hyodol: An AI companion chat robot for elderly people

To combat loneliness, a South Korean company unveiled an AI-powered robot doll designed to interact with elderly people living with dementia. The companion combines language processing, emotion recognition, speech and music playback to provide a sense of companionship. It can remind users to take their medication, and its safety features alert caretakers if no movement is detected for an extended period, so the doll effectively keeps a watchful eye on its owner.

[Entertainment] Marilyn Monroe Reborn as Interactive AI Avatar

San Francisco startup Soul Machines is developing a nostalgic experience that brings Marilyn Monroe back as an interactive AI avatar. The experience is personalized and real-time, letting fans hold a conversation with the Marilyn character.

[Marketing campaign] Lunchables AI vs Kids

The latest campaign from Lunchables Dunkables is a creativity challenge. An AI and a group of kids are given the same prompt, for example "Imagine our Dunkables Mozza Sticks or Pretzel Twists as something fantastical," and each has to create something truly unique. The point of the campaign is to celebrate kids’ imagination and to show that not even an AI can compete with their creativity.

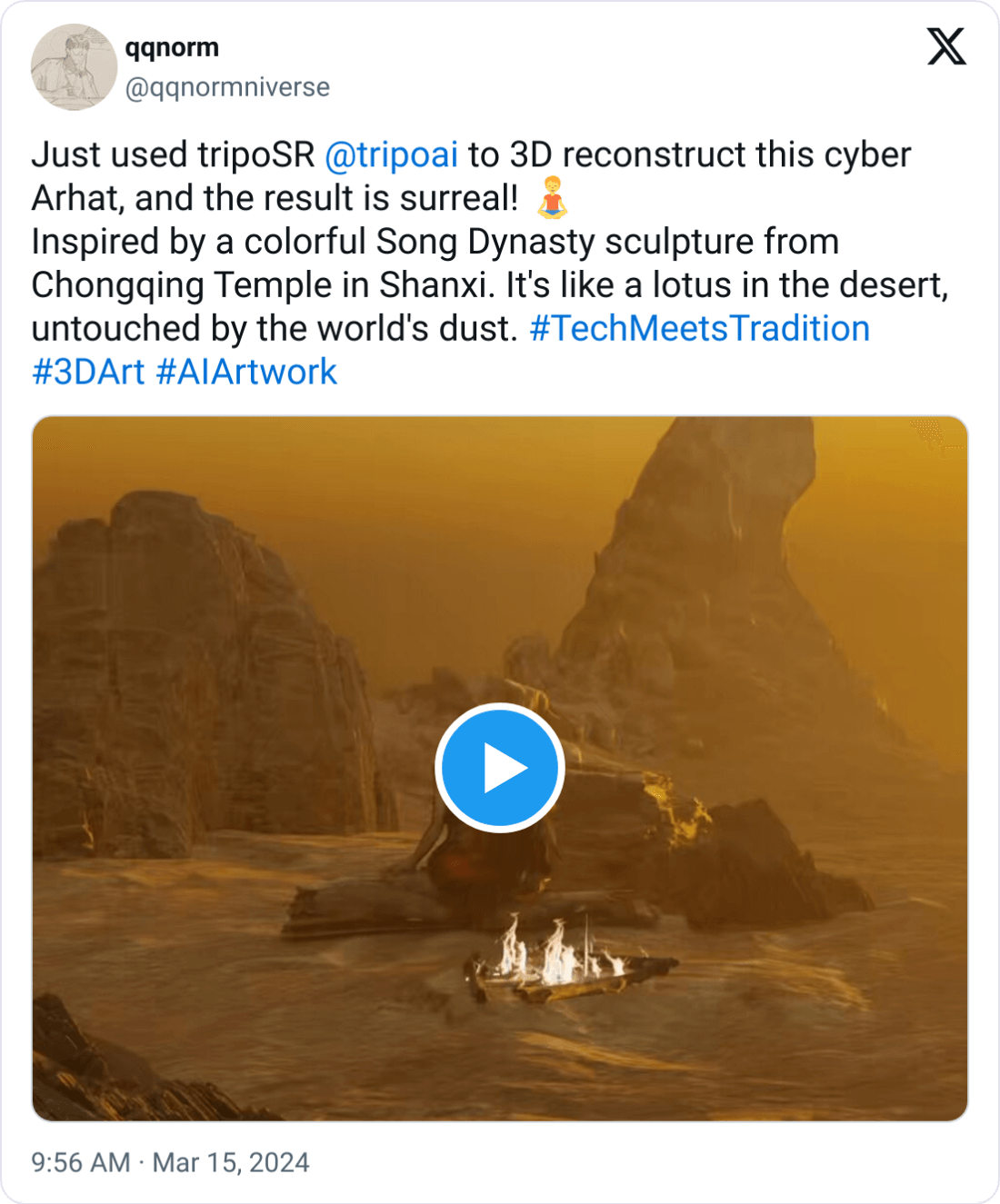

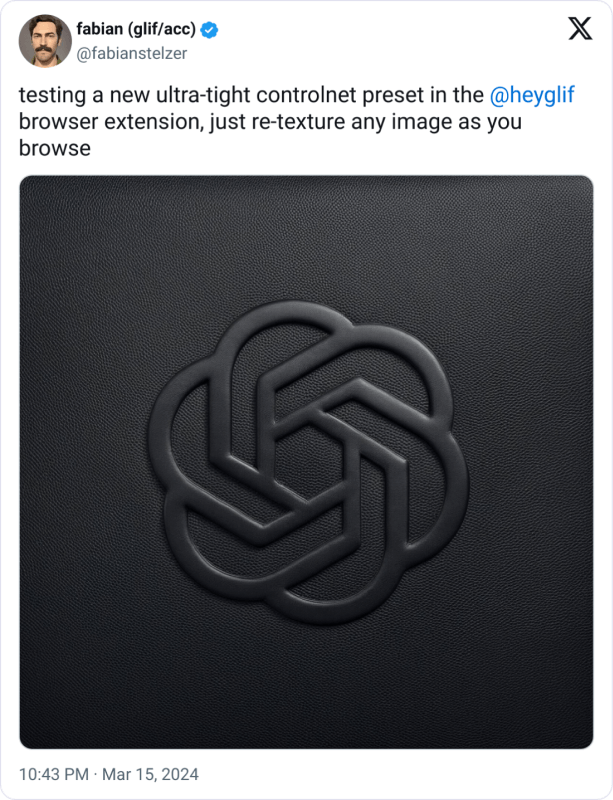

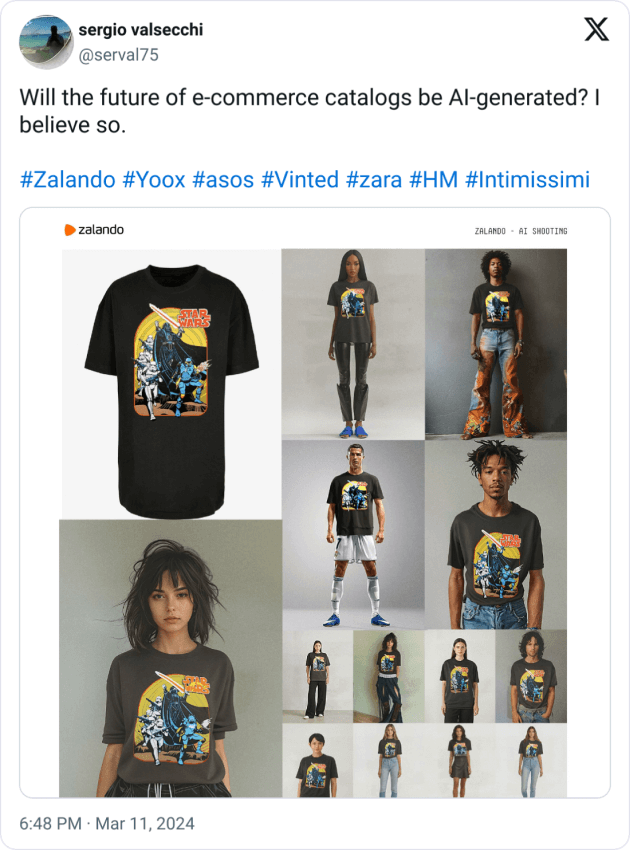

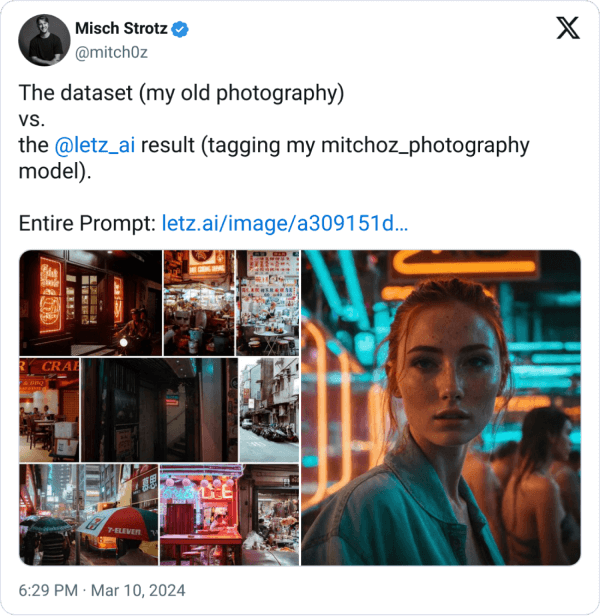

🌞 Curated tweets

🦖 Visual experimentation: The ’60s

The 1960s are making a vibrant comeback! Over the past few weeks, we've witnessed a resurgence of the bold oranges, greens and patterns that defined this creative and explosive era. From fashion to interior design, furniture and overall aesthetics, the signature ’60s flair is once again taking center stage.

This revival is a breath of fresh air after the minimalist trends that dominated the previous decade, filling the visual landscape with the kaleidoscope of colors and patterns that once defined the Swinging Sixties.

To capture the essence of this era, we've created a series of images using Midjourney. These images recreate the analog charm and authenticity of 1960s fashion models, iconic interiors, and the era's atmospheric vibes.

Does this '60s resurgence resonate with you, or do you prefer a different aesthetic era? We're eager to hear your perspective on this groovy comeback.

🌊 Technical experimentation: the “weird” parameter in Midjourney

What is the weird parameter? From the Midjourney documentation:

“Explore unconventional aesthetics with the experimental --weird or --w parameter. This parameter introduces quirky and offbeat qualities to your generated images, resulting in unique and unexpected outcomes.

--weird accepts values: 0–3000.

The default --weird value is 0.

--weird is a highly experimental feature. What's weird may change over time.

--weird is compatible with Midjourney Model Versions 5, 5.1, 5.2, 6, niji 5 and niji 6.

--weird is not fully compatible with seeds.”

Let’s see how it actually affects a prompt.

The prompt:

At dusk from cliffs, a motion camera captures a robed woman against fading light, hands summoning dancing flames from fingertips twisting upon the sea breeze. Below, crashing waves build as sentinels overlook sparks from upwards palms. Creative lighting depicts solitary fire-weaving through sharp detail in dusk's dimming glow.

--weird 100

--weird 300

--weird 500

--weird 700

--weird 1000

--weird 2000

--weird 3000

Conclusion: According to the documentation, --weird is still experimental. In our tests, higher values made the images slightly more imaginative in style, movement and composition, but the difference was not significant. For more creative results, we recommend combining --weird with other parameters such as --stylize or --chaos.
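If you want to rerun this sweep, or try our suggestion of pairing --weird with --stylize and --chaos, a small script can generate the prompt variations for you. This is a minimal sketch under our own assumptions: `build_grid` is a hypothetical helper, not part of any Midjourney tool, and it only builds text to paste into /imagine; it never talks to Midjourney. The --weird range (0–3000) comes from the documentation quoted above, while the --stylize (0–1000) and --chaos (0–100) ranges are what we understand the current docs to list, so double-check them before relying on the validation.

```python
from itertools import product
from typing import List, Optional, Sequence, Tuple

# Parameter ranges as we understand them (verify against the current Midjourney docs).
WEIRD_RANGE = (0, 3000)    # --weird, default 0
STYLIZE_RANGE = (0, 1000)  # --stylize, default 100
CHAOS_RANGE = (0, 100)     # --chaos, default 0

BASE_PROMPT = (
    "At dusk from cliffs, a motion camera captures a robed woman against fading light, "
    "hands summoning dancing flames from fingertips twisting upon the sea breeze. "
    "Below, crashing waves build as sentinels overlook sparks from upwards palms. "
    "Creative lighting depicts solitary fire-weaving through sharp detail in dusk's dimming glow."
)


def _check(name: str, value: int, bounds: Tuple[int, int]) -> None:
    """Raise ValueError if a parameter value falls outside its range."""
    low, high = bounds
    if not low <= value <= high:
        raise ValueError(f"--{name} must be between {low} and {high}, got {value}")


def build_grid(
    prompt: str,
    weird_values: Sequence[int],
    chaos_values: Sequence[Optional[int]] = (None,),
    stylize_values: Sequence[Optional[int]] = (None,),
) -> List[str]:
    """Hypothetical helper: one prompt string per parameter combination.

    None means "omit the parameter", so Midjourney keeps its default.
    """
    prompts = []
    for weird, chaos, stylize in product(weird_values, chaos_values, stylize_values):
        _check("weird", weird, WEIRD_RANGE)
        params = [f"--weird {weird}"]
        if chaos is not None:
            _check("chaos", chaos, CHAOS_RANGE)
            params.append(f"--chaos {chaos}")
        if stylize is not None:
            _check("stylize", stylize, STYLIZE_RANGE)
            params.append(f"--stylize {stylize}")
        prompts.append(f"{prompt} {' '.join(params)}")
    return prompts


if __name__ == "__main__":
    # The sweep from this issue: --weird alone, everything else at its default.
    for p in build_grid(BASE_PROMPT, [100, 300, 500, 700, 1000, 2000, 3000]):
        print(p)
    # A small follow-up grid pairing --weird with --chaos and --stylize (4 prompts).
    for p in build_grid(BASE_PROMPT, [500, 1000], chaos_values=[0, 50], stylize_values=[250]):
        print(p)
```

Using `itertools.product` keeps the grid explicit: every extra value you add multiplies the number of prompts, which is a useful reminder of how quickly GPU time adds up when exploring parameters.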

📰 Updates on AI tools

Midjourney → With the new --cref and --cw parameters, you can now create consistent characters across images: Midjourney applies the same facial features and other characteristics from a reference image across multiple renders.
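To make this concrete, here is a sketch of how a character-reference prompt is put together, assuming the parameters behave as we understand Midjourney's documentation: --cref takes the URL of a reference image, and --cw (character weight) runs from 0 to 100, where 100 (the default) carries over face, hair and clothing, while 0 focuses mainly on the face. The helper `character_prompt` and the example URL are hypothetical; the script only formats a prompt string.

```python
from urllib.parse import urlparse

CW_MIN, CW_MAX = 0, 100  # --cw range as we understand it; 100 is the default


def character_prompt(prompt: str, reference_url: str, cw: int = 100) -> str:
    """Hypothetical helper: append --cref and --cw after basic sanity checks."""
    parsed = urlparse(reference_url)
    # --cref needs an image URL Midjourney can fetch, so require http(s) and a host.
    if parsed.scheme not in ("http", "https") or not parsed.netloc:
        raise ValueError(f"--cref needs an http(s) image URL, got {reference_url!r}")
    if not CW_MIN <= cw <= CW_MAX:
        raise ValueError(f"--cw must be between {CW_MIN} and {CW_MAX}, got {cw}")
    return f"{prompt} --cref {reference_url} --cw {cw}"


if __name__ == "__main__":
    ref = "https://example.com/my-character.png"  # hypothetical reference image
    # Same character in two scenes; --cw 0 keeps mainly the face so the outfit can change.
    print(character_prompt("a detective walking through neon rain at night", ref))
    print(character_prompt("the same detective relaxing on a sunny beach", ref, cw=0))
```

Keeping the reference URL fixed and varying only the scene and --cw is a simple way to test how much of the character survives from one render to the next.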

Pika Labs x ElevenLabs → You can now generate lip-synced voice inside Pika Labs video generation. The feature is powered by ElevenLabs and is available only to Pro users.

Pika Labs → Pika Labs has introduced sound effect generation for its AI video model. Sound can be added automatically, or you can describe what you want and choose from 3 generated results.

Capitol AI → You can now add yourself, friends or anyone else to the stories generated by Capitol AI. Once you have added them, you can name your characters and write a short bio describing each one, for a more tailored story than ever.

🧠 AI tools

Letz → High-quality AI image generation with consistent characters, objects and styles

Haiper → Text and image to video, plus video repainting. Founded by alumni of Google DeepMind, TikTok and top academic research labs

Tripo → High-quality, ready-to-use 3D models from text and images

Skybox → Imagine and fine-tune any 360° world

📖 Interesting reads

🔮 Predictions

On-Device Generative AI Revolution

Powerful generative AI models running locally on consumer devices like smartphones and AR/VR headsets

Enabled by breakthroughs in neural network optimization and efficient hardware integration

Privacy-preserving generative AI, with personal data processed securely on-device rather than in the cloud

Unlocks new creative and productivity use cases while ensuring data privacy and low latency

The Next Wave in Personalized Video

Widespread Adoption: By 2030, AI-driven face and voice cloning technologies will be ubiquitous, transforming how companies deliver personalized video content across industries.

Technological Advancements: Breakthroughs in neural radiance fields (NeRF) will make digital avatars indistinguishable from real humans, enhancing user engagement in various applications.

Ethical Focus: Regulators and industry leaders will need to collaborate to address concerns around data privacy and the ethical use of AI-generated content, shaping responsible usage guidelines.

The Mahazine also comes in a monthly edition, with more visual diaries, experiments and discoveries about creative AI.

Today marks the release of our first edition! And the best part? It's absolutely free! Discover it right here:

If you enjoy the Mahazine, please spread the word by sharing it with your colleagues, friends and fellow creatives. Your support in growing our community is invaluable, as it helps us reach more creative minds and keep delivering high-quality content.